Bridging the Gap in Road Assessments through AI

In an age where innovation is reshaping our daily lives, addressing the state of our infrastructure is becoming increasingly essential. For many people, navigating urban and rural road networks means not only dodging potholes but also recognizing that many communities are under-equipped to maintain their roads effectively.

Traditional methods for assessing pavement quality have often relied on expensive equipment and specialized vehicles, which limits their use primarily to major highways and heavily trafficked routes. Less-traveled streets are left vulnerable to deterioration, and the agencies responsible for them lack reliable data to inform road maintenance. Researchers at Carnegie Mellon University have developed an alternative: a low-cost, AI-powered approach, built around a smartphone app, that extends pavement condition assessment to roads that traditional surveys rarely reach.

How the Technology Works

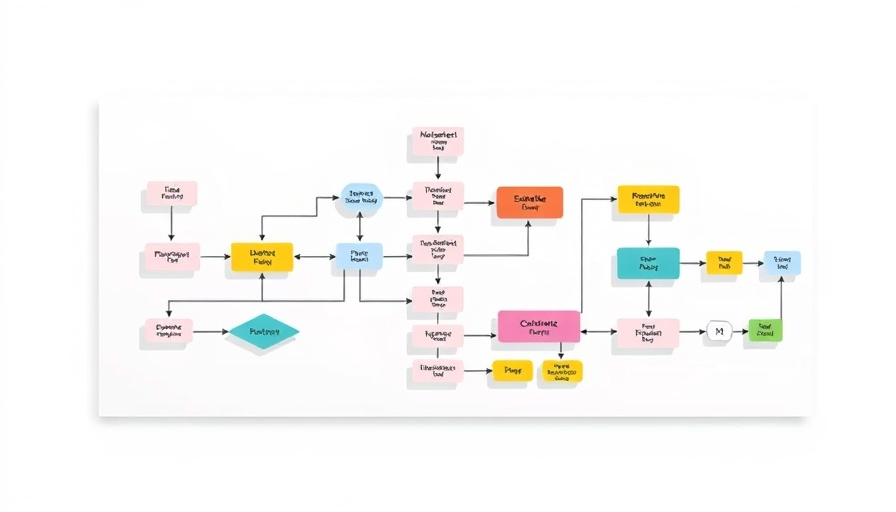

The approach combines machine learning with everyday technology. Using a smartphone application designed by RoadBotics, together with open-source data on weather patterns, traffic levels, and socioeconomic attributes, towns can predict pavement deterioration throughout their road networks. Used in regular vehicles, the app collects imagery of the road surface, which AI models then analyze to determine current conditions and forecast future deterioration.

Tao Tao, a postdoctoral researcher and lead author of the study, explained, "The method offers comprehensive coverage, enabling analysis of all road segments throughout the entire network and across multiple time scales." This means that cities can gain insight not only into current road conditions but also into how those conditions are likely to change, which helps them plan maintenance.
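To make the idea concrete, the following is a minimal sketch of how an image-derived condition score might be combined with contextual data to forecast a segment's condition at several time horizons. It is not the study's model: the `Segment` fields, the 1-to-5 score scale, and the coefficients are all hypothetical and hand-picked for illustration, whereas a real system would learn such relationships from data.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    segment_id: str
    current_score: float   # image-derived condition; hypothetical scale, 1 (good) to 5 (poor)
    freeze_thaw_days: int  # weather feature: freeze-thaw days per year
    daily_traffic: int     # traffic feature: vehicles per day


# Hand-picked illustrative coefficients, NOT fitted to data or taken from the study.
BASE_WEAR_PER_YEAR = 0.05
FREEZE_THAW_WEIGHT = 0.004
TRAFFIC_WEIGHT = 0.00002
WORST_SCORE = 5.0


def forecast_score(seg: Segment, years: float) -> float:
    """Project the condition score forward with a toy linear wear rate."""
    yearly_wear = (
        BASE_WEAR_PER_YEAR
        + FREEZE_THAW_WEIGHT * seg.freeze_thaw_days
        + TRAFFIC_WEIGHT * seg.daily_traffic
    )
    # The score cannot get worse than the bottom of the scale.
    return min(WORST_SCORE, seg.current_score + yearly_wear * years)


if __name__ == "__main__":
    seg = Segment("example-segment", 2.0, freeze_thaw_days=50, daily_traffic=5000)
    # Forecast the same segment at several time scales.
    for horizon in (1, 3, 5):
        print(f"{seg.segment_id}: year {horizon}: {forecast_score(seg, horizon):.2f}")
```

Running the same forecast for every segment in a network, at more than one horizon, is what gives the whole-network, multi-time-scale view described in the quote above.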

Real-World Applications: Assessing Community Needs

In tests across nine diverse communities in the United States, the approach showed significant predictive capability for pavement conditions. Unlike traditional assessments, the AI-driven technique can account for factors such as climate patterns and local demographics, which may influence road wear differently from one neighborhood to another.

The implications of this technology are wide-ranging. For small towns with limited budgets, it offers a cost-effective way to assess road conditions without heavy investment in specialized equipment. Mid-sized cities can use the predictions to prioritize preventative maintenance, addressing the roads most at risk and directing resources to where they will have the greatest impact.
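As a rough illustration of that prioritization step, the sketch below ranks segments by predicted condition and selects repairs greedily until a budget is exhausted. The street names, scores, costs, and the greedy rule itself are assumptions made for this example; the study does not prescribe a particular selection procedure.

```python
def prioritize(segments, budget):
    """Pick segments to repair, worst predicted condition first.

    segments: list of (segment_id, predicted_score, repair_cost) tuples,
              where a higher predicted_score means worse condition.
    budget:   total amount available, in the same units as repair_cost.
    """
    ranked = sorted(segments, key=lambda s: s[1], reverse=True)
    chosen = []
    remaining = budget
    for segment_id, _score, cost in ranked:
        # Skip anything that no longer fits; cheaper segments may still fit.
        if cost <= remaining:
            chosen.append(segment_id)
            remaining -= cost
    return chosen


if __name__ == "__main__":
    # Hypothetical data for illustration only.
    network = [
        ("Main St", 4.5, 60),
        ("Oak Ave", 3.9, 50),
        ("Elm Rd", 2.1, 30),
    ]
    print(prioritize(network, budget=100))  # ['Main St', 'Elm Rd']
```

A greedy ranking like this is easy to explain to a council or public works board, but it is not guaranteed to make the best possible use of a budget, and a real program would also weigh factors such as traffic volume and equity.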

A Revolution in Infrastructure Management

For large urban areas, this tool could lead to more equitable infrastructure decisions by taking under-served neighborhoods into account, fostering a fairer distribution of maintenance resources. By combining readily available data with the app's AI-based analysis, public works departments can respond proactively instead of reactively, addressing road issues before they escalate.

The Future of Road Assessment

As technology continues to evolve, the role of artificial intelligence in civil engineering and infrastructure maintenance is likely to expand. Cost-effective solutions of this kind address a fundamental need in road assessment and provide a model that could be replicated in other contexts, potentially changing how communities approach maintenance and resource allocation more broadly.

With the public's safety and quality of life at stake, embracing this innovative technology represents a forward-thinking step towards modernizing our road assessment practices. Communities that leverage this AI-driven methodology will not only enhance road reliability but also demonstrate proactive engagement in infrastructure sustainability and improvement.
