Unlocking Cost-Effective LLM Workloads with Gemini CLI on GKE

The complexity and cost of deploying Large Language Model (LLM) workloads have often posed significant challenges for businesses and developers. However, Google Kubernetes Engine (GKE), in combination with Gemini CLI, provides an innovative solution aimed at streamlining this process, making it more accessible and cost-effective.

What Makes GKE an Ideal Platform?

Google Kubernetes Engine (GKE) simplifies the deployment of LLMs by offering the Inference Quickstart model, which drastically reduces the need for trial and error through its out-of-the-box manifests. By integrating the Gemini CLI, users can take advantage of data-driven recommendations on workload performance and cost, enhancing their deployment process to mere minutes instead of months.

Maximizing Performance and Minimizing Costs

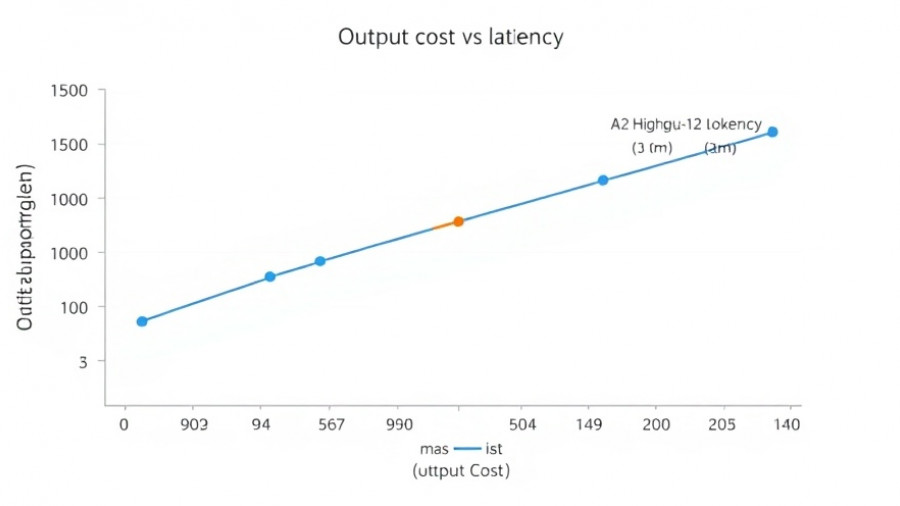

One of the key advantages of using the Inference Quickstart is its ability to help users balance performance with cost. As workloads increase in complexity, it’s critical to choose the right hardware. GKE provides insights into performance across various accelerators, while still maintaining cost efficiency. For example, achieving ultra-low latency with models like Gemma 3 can significantly increase operational costs, particularly due to inefficiencies in request batching.

Understanding Cost Per Token

When deploying models on GKE, understanding the cost per input/output token becomes essential. GKE’s Inference Quickstart uses a specific formula to account for accelerator costs by attributing the total expenses to both input and output tokens. This heuristic—where output tokens cost approximately four times more than input tokens—highlights the importance of evaluating the costs associated with different workloads. By leveraging the Gemini CLI, users can adjust this ratio to better fit their specific use cases, facilitating a much more refined financial strategy.

Learn to Optimize Your LLM Workloads

Achieving cost efficiency in LLM deployments is more than just about choosing a cheaper model; it involves understanding the underlying data and its implications on both performance and budget. GKE Inference Quickstart enables users to implement optimizations for storage and autoscaling, ensuring that their solutions are not only effective but also sustainable over time.

In today's competitive landscape of AI and machine learning, leveraging platforms like GKE with advanced tools such as Gemini CLI can provide a significant edge. By combining efficiency with personalized recommendations tailored to your specific requirements, businesses can make data-driven decisions that maximize output while minimizing costs. This innovative approach helps organizations navigate the complexities of artificial intelligence deployment more strategically.

Add Row

Add Row  Add

Add

Write A Comment